This study documents several case studies, undertaken by Forensic Architecture researchers and our growing network of open source software (OSS) contributors, at the intersection of human rights research and machine learning, with a particular focus on applications of ‘synthetic’ image data.

This research is ongoing and follows on from research we released in early 2019. You can get involved by contributing on GitHub and joining our Discord channel, where our researchers and OSS contributors coordinate, discuss, and deepen our existing research.

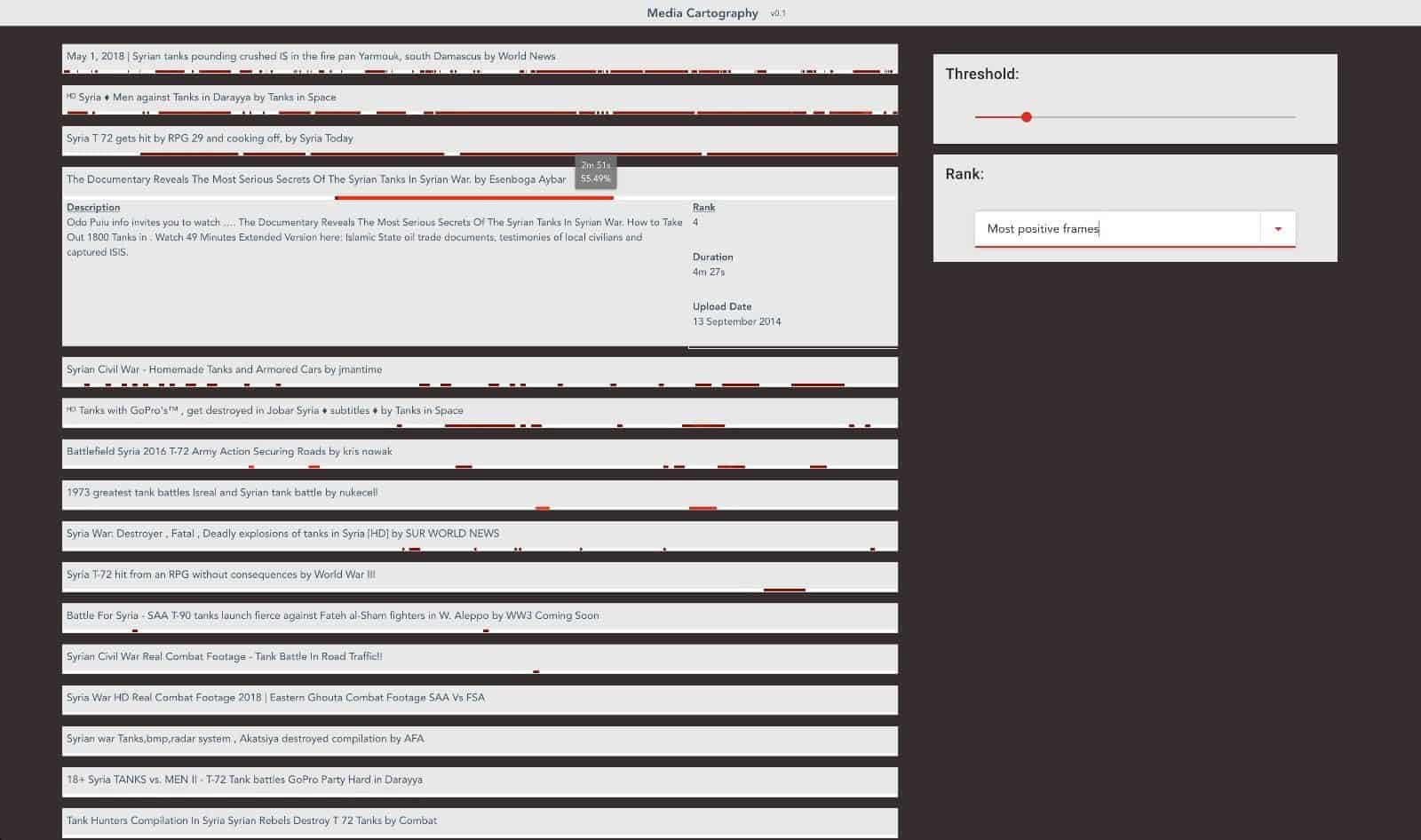

Machine learning applications at Forensic Architecture are geared towards triaging large volumes of open source data in order to find moments within images, audio, or video that are relevant to a given investigation and warrant further inspection. We developed an open source tool, Mtriage, for this purpose. (For an in-depth presentation of Mtriage and the way we use it at Forensic Architecture, see our post ‘Computer Vision in Triple Chaser’.)

After introducing our open source initiative in machine learning in ‘Triple Chaser’, in December 2019 we published a technical paper at NeurIPS detailing the approach in greater depth. In early 2020, we introduced public-facing bi-weekly development cycles for Mtriage.

The goal of our machine learning research in 2019 has been twofold:

1. To test the viability of mtriage as a practicable workflow in human rights research.

2. To confirm that synthetic data offers an alternative mode of training effective computer vision classifiers (which can then be deployed in mtriage).

To reach these two objectives, we have focused on generalising from the task of classifying Triple-Chaser grenades to classifying 37-40mm tear gas canisters in images. (37-40mm is a standard calibre for tear gas munitions, manufactured and used around the world.)

As part of our contribution to ‘Uncanny Valley’, at San Francisco’s de Young Museum, this study presents three recent updates to our machine learning research:

- Rewriting our synthetic data generation engine with Unity.

- Training classifiers to detect 37-40mm Norinco canisters: using synthetic data, and experimentally with few-shot detection.

- Preliminary experiments in audio analysis for event detection.

Generating synthetic data with Unity

Our first attempts at generating synthetic training data from 3D models used Cinema4D. During research for ‘Triple-Chaser’, we switched to Unreal Engine, for its built-in procedural defaults and greater cinematic flexibility.

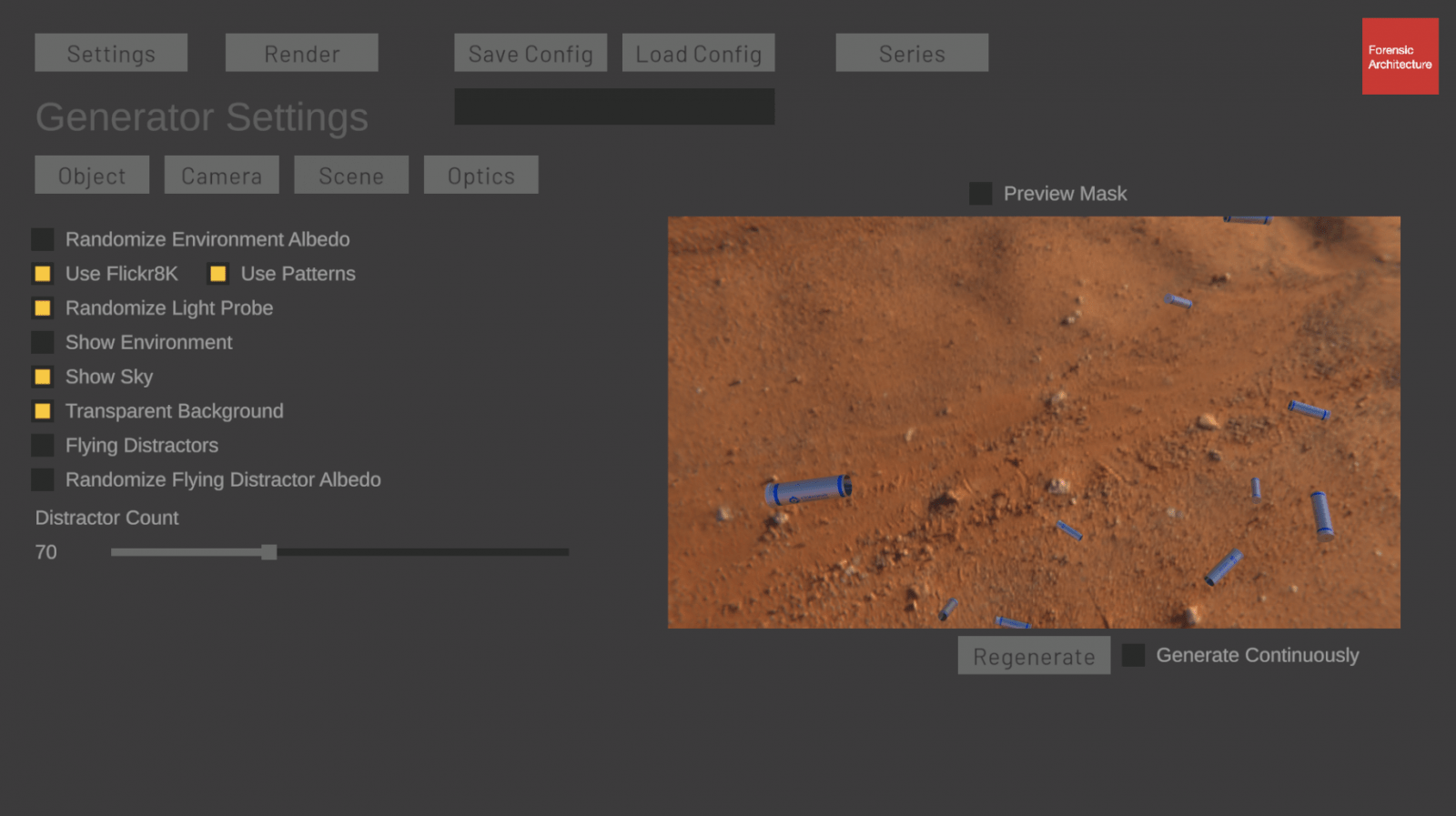

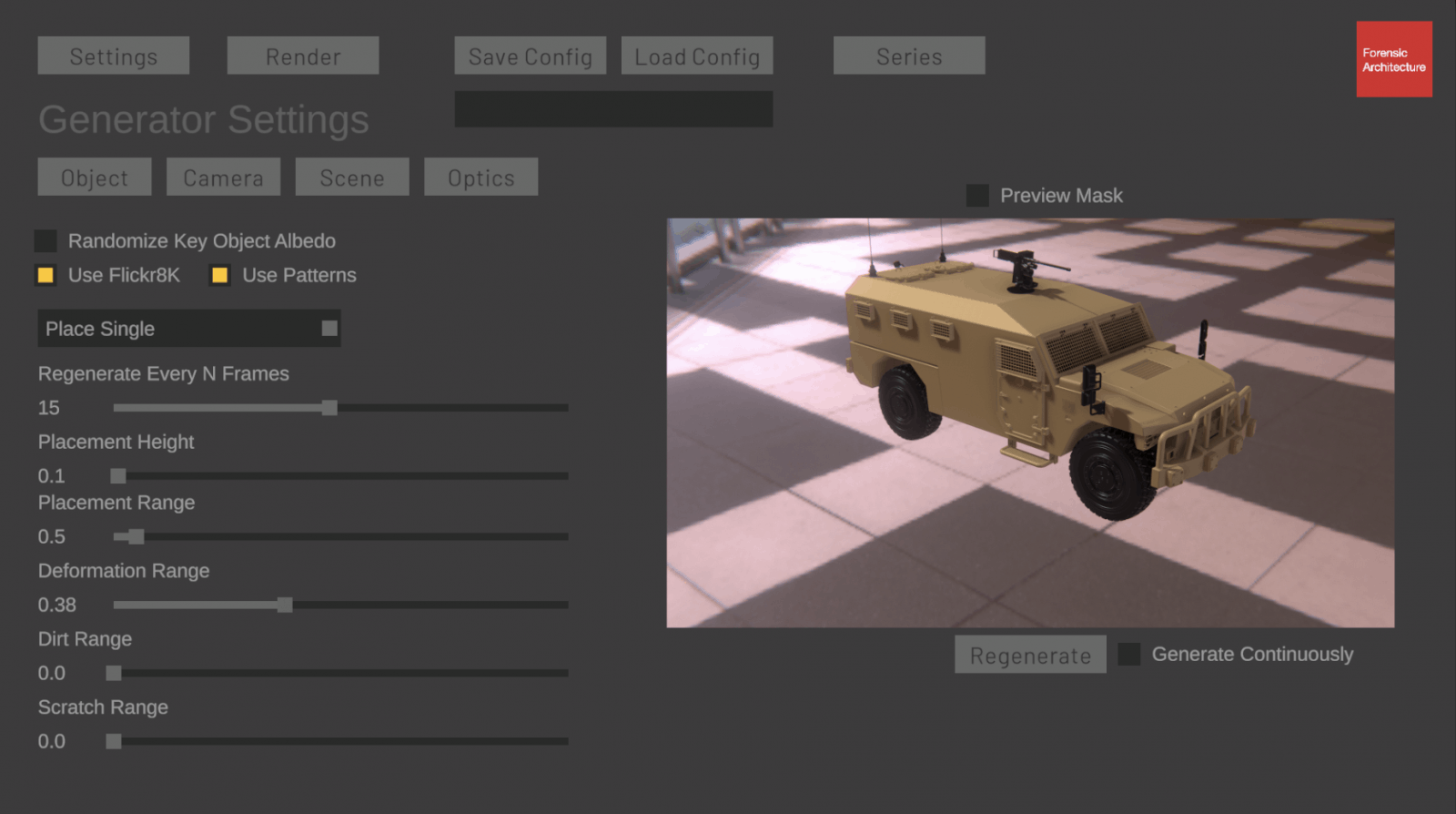

Building on previous work in synthetic data generation by VFRAME, we have since created a more generalized, stand-alone application in Unity, which allows a user to import a 3D model, set generation parameters, and export training-ready datasets quickly.

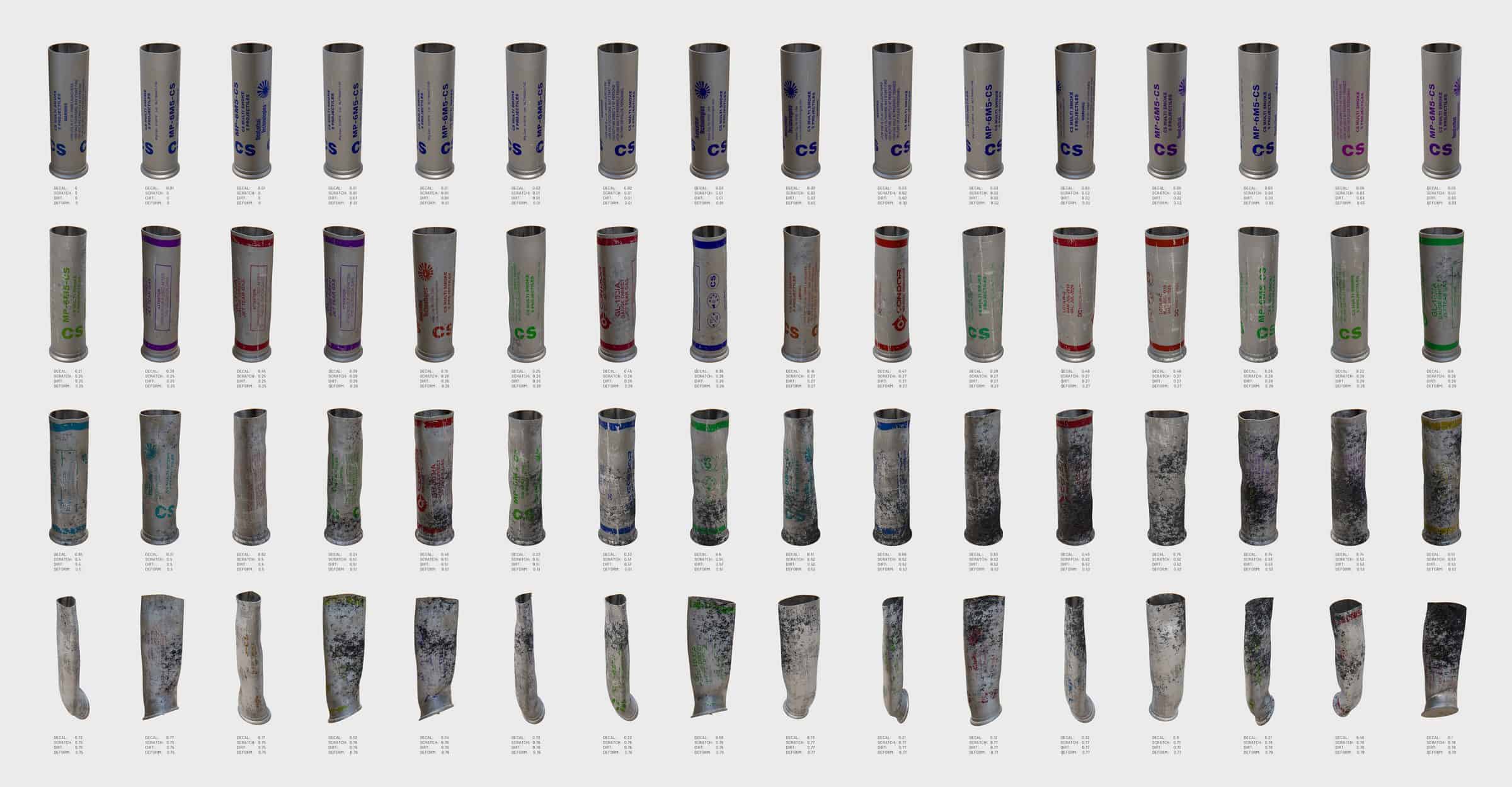

The application imports a 3D model together with its materials and textures, as well as other information such as decal variations, physical deformations, and dirt and scratch patterns. It then renders out a training dataset, randomizing model parameters and transformations, camera angles, and lighting and environment properties. The parameters of synthetic data generation can be controlled via the application’s user interface, and the results can be previewed within the application window before the full dataset is rendered.

This workflow allows us to explore the impact of various aspects of synthetic data generation on the efficacy of training machine learning classifiers. By creating multiple datasets with controlled changes in each generation parameter, we can study a specific parameter’s impact on training.
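The controlled-change idea can be sketched in a few lines. This is a minimal Python illustration, not the Unity application itself: the `GenerationParams` fields, their ranges, and the `sample_scene` and `ablation_sweep` helpers are all hypothetical. Each dataset in the sweep varies one parameter while every other parameter stays fixed. Reusing the same random seed keeps the other random draws aligned across datasets, so differences between them come mainly from the swept parameter.

```python
import random
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class GenerationParams:
    # Hypothetical generation parameters; names and ranges are illustrative only.
    camera_distance_range: tuple = (0.5, 3.0)  # metres
    light_intensity_range: tuple = (0.2, 1.5)
    dirt_probability: float = 0.5
    decal_variants: int = 4


def sample_scene(params, rng):
    """Draw one randomised scene description from the given parameter ranges."""
    return {
        "camera_distance": rng.uniform(*params.camera_distance_range),
        "light_intensity": rng.uniform(*params.light_intensity_range),
        "dirty": rng.random() < params.dirt_probability,
        "decal": rng.randrange(params.decal_variants),
    }


def ablation_sweep(base, field, values, n_images, seed=0):
    """Build one dataset per value of `field`, holding all other parameters fixed.

    The same seed is reused for every dataset so that the unrelated random
    draws (camera, lighting, and so on) line up across datasets.
    """
    datasets = {}
    for value in values:
        params = replace(base, **{field: value})
        rng = random.Random(seed)
        datasets[value] = [sample_scene(params, rng) for _ in range(n_images)]
    return datasets
```

For example, `ablation_sweep(GenerationParams(), "dirt_probability", [0.0, 0.5, 1.0], 1000)` yields three scene lists that differ only in how often dirt is applied; training one classifier per list would then isolate that parameter’s effect.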

While this tool is not yet ready to be open sourced, we hope it will mature to a stage where it makes sense to release it in the coming months.

37-40mm tear gas canisters

The ability to computationally detect appearances of this standard 37-40mm tear gas canister would expedite human rights research relating to tear gas. Because of its classification as a “non-lethal” munition, there is little documentation from manufacturers regarding sales and export, so accountability activists regularly track sale and use by finding photographic documentation of canisters in footage from protests. As protests are increasingly mediatised, mtriage can help identify instances of tear gas use more quickly by isolating individual image frames within hours-long videos.

Open source contributors helped us first collect and then annotate approximately two hundred images of 37-40mm canisters found online. While this set of images is far from fully representative of all the ways the canister could appear, it serves as a sufficient ‘test set’ to evaluate the accuracy of classifiers trained on synthetic data.

As noted in our recently published technical paper, even classifiers with moderate accuracy and precision can prove useful when deployed via mtriage. So long as a classifier returns reasonably reliable results (e.g. consistently scoring in the upper quartile when an image does contain a canister, and consistently lower when it does not), it can still play a productive role within a research workflow that includes FA’s own researchers.

Training a classifier for the 37-40mm canister using only synthetic data is more difficult than it was for the Triple-Chaser. It is relatively straightforward to induce a classifier to notice the generic shape of the canister, but these naive classifiers return false positives for many similarly shaped objects, which can introduce a range of irrelevant videos into the researcher’s triaging workstation.

Probability thresholds become important as a way to disambiguate images that genuinely contain a tear gas canister from those that hold something similarly shaped or textured.
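One way to choose such a threshold is to measure precision and recall on the annotated test set of real images. The following is a minimal sketch, assuming each test image has a classifier score in [0, 1] and a true/false label for whether it contains a canister; the function names and the example scores are hypothetical, not results from our classifiers. Because recall can only fall as the threshold rises, the lowest threshold that meets a precision target keeps the most true detections among the thresholds that qualify.

```python
def precision_recall(scores, labels, threshold):
    """Precision and recall when every score >= threshold counts as a detection."""
    tp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y)
    fp = sum(1 for s, y in zip(scores, labels) if s >= threshold and not y)
    fn = sum(1 for s, y in zip(scores, labels) if s < threshold and y)
    # With no detections at all, precision is treated as 1.0 by convention.
    precision = tp / (tp + fp) if tp + fp else 1.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def lowest_threshold_for_precision(scores, labels, min_precision):
    """Lowest observed score usable as a threshold that meets the precision target.

    Returns None if no candidate threshold reaches `min_precision`.
    """
    for t in sorted(set(scores)):
        precision, _ = precision_recall(scores, labels, t)
        if precision >= min_precision:
            return t
    return None


# Made-up scores for seven test images (True = canister present).
scores = [0.95, 0.9, 0.8, 0.7, 0.6, 0.3, 0.2]
labels = [True, True, False, True, False, False, False]
```

With these made-up numbers, a precision target of 0.75 selects a threshold of 0.7 and still recovers all three canister images, while a stricter target of 0.9 pushes the threshold to 0.9 and misses one of them.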

We are currently experimenting with two approaches to training classifiers for this canister. The first uses synthetic data generated by the Unity engine described above, adapting approaches from relevant papers and experimenting creatively in order to improve loss on the control test set of real images. Following Prakash et al.’s approach in this paper, the idea is that extreme training examples at the threshold of recognisability can reinforce the classifier’s capacity to distinguish the object itself from similarly shaped or similarly textured objects. By combining seemingly outlandish but still recognisable images with more normative ones, we aim to train a classifier that detects instances of its object with more nuance.
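A simple way to produce such a mix is to draw most scenes from normative parameter ranges and a chosen fraction from deliberately widened ones. The sketch below is a hypothetical illustration of that mixing step only, not Prakash et al.’s method or our Unity implementation; the `NORMATIVE` and `EXTREME` ranges, their parameter names, and `sample_mixed` are assumptions for the example.

```python
import random

# Hypothetical parameter ranges: normative values versus widened, "extreme" ones.
NORMATIVE = {"hue_shift": (-0.05, 0.05), "scale": (0.8, 1.2)}
EXTREME = {"hue_shift": (-0.5, 0.5), "scale": (0.3, 3.0)}


def sample_mixed(n, extreme_fraction, seed=0):
    """Sample n scene settings, drawing roughly `extreme_fraction` of them
    from the widened ranges and the rest from the normative ranges."""
    rng = random.Random(seed)
    samples = []
    for _ in range(n):
        extreme = rng.random() < extreme_fraction
        ranges = EXTREME if extreme else NORMATIVE
        sample = {name: rng.uniform(lo, hi) for name, (lo, hi) in ranges.items()}
        sample["extreme"] = extreme  # keep a flag so the mix can be audited later
        samples.append(sample)
    return samples
```

Treating `extreme_fraction` as a generation parameter in its own right makes it possible to test how much outlandish data helps before it starts to hurt.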

The second uses a newer technique in object detection called few-shot learning. (This research direction is being pioneered by open source contributor Christopher Tegho.) While traditional detectors tend to overfit when trained on small amounts of data, a few-shot detector is specifically designed to generalise well from just a few examples of the object in question.

If few-shot detection were fully accurate and efficient, it would do away with the need for synthetic data entirely: it would be possible to train a detector using just a small number of training examples sourced and annotated from the web (a task we undertake in current projects in any case, to construct a test set). Unsurprisingly, we found that using extreme synthetic training examples like those depicted above with few-shot detection is detrimental to the classifier. Because a few-shot detector learns from just a few training examples, if an extreme example is included during training, the detector’s idea of a canister drifts too far from canisters as they actually appear for it to identify them reliably.

Though in many ways these two approaches to training classifiers are contradictory (one relies on just a few thoroughly normative training examples, the other on a high volume of synthetically generated examples across the full spectrum of an object’s recognisability), they operate co-constructively as dual components of an mtriage workflow. By running both classifiers on each frame of a video, we are able to get a more precise reading of what it may contain.
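The text does not specify how the two readings are combined; one simple possibility is to order frames for human review by how many classifiers flag them. The sketch below assumes each classifier has already produced one score in [0, 1] per frame. The function name `triage_order` and the shared threshold of 0.5 are hypothetical choices for illustration.

```python
def triage_order(synthetic_scores, few_shot_scores, threshold=0.5):
    """Return frame indices ordered for review.

    Frames flagged by both classifiers come first, then frames flagged by
    one, then frames flagged by neither; ties are broken by mean score.
    """
    if len(synthetic_scores) != len(few_shot_scores):
        raise ValueError("both classifiers must score the same frames")

    def key(i):
        s, f = synthetic_scores[i], few_shot_scores[i]
        votes = int(s >= threshold) + int(f >= threshold)
        return (votes, (s + f) / 2)

    return sorted(range(len(synthetic_scores)), key=key, reverse=True)
```

Agreement between two classifiers trained in such different ways is a stronger signal than either score alone, which is why it is used as the primary sort key here.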

Analysing audio to detect events

Many videos that contain evidence of misuse of tear gas do not image the culprit canister in any of its frames. Tear gas canisters are small, and videos from conflict zones are often filmed from a perspective that is too far away to capture the object in any detail, if at all.

The legibility of tear gas as an object, however, extends beyond the visibility of the canister that released it. Certain types of tear gas, such as the Triple-Chaser, are often fired from a launcher, emitting an audio signature that might be captured by nearby recording devices even when they are not pointed directly at the point of fire. Alongside training computer vision classifiers, we are therefore also researching ways to detect specific audio signatures such as tear gas fire, which we can then deploy with mtriage to identify moments in media that capture them.

The detection of loud noises such as tear gas fire or gunshots is useful for another reason: it can be used to create an audio ‘fingerprint’ of a video, which can then in turn be used to synchronise it with other videos that capture the same audio.
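As an illustration of the synchronisation step, suppose loud events have already been detected in two recordings as lists of onset times in seconds. A simple alignment scheme, hypothetical and not necessarily the one we use, tries every pairing of an event in one recording with an event in the other as a candidate time offset. It then keeps the offset under which the most events line up. The function name `estimate_offset` and the 50 ms tolerance are assumptions.

```python
def estimate_offset(onsets_a, onsets_b, tolerance=0.05):
    """Estimate the shift (seconds) that maps events in recording A onto
    recording B, so that t_b is approximately t_a + offset.

    Every pairing of an onset in A with an onset in B proposes a candidate
    offset; the candidate under which the most onsets of A fall within
    `tolerance` of some onset in B wins. Returns (offset, match_count).
    """
    best_offset, best_matches = None, 0
    for a in onsets_a:
        for b in onsets_b:
            offset = b - a
            matches = sum(
                1
                for t in onsets_a
                if any(abs(t + offset - u) <= tolerance for u in onsets_b)
            )
            if matches > best_matches:
                best_offset, best_matches = offset, matches
    return best_offset, best_matches
```

The brute-force search is quadratic in the number of events, which is acceptable for the handful of loud events typical of a short clip; the match count doubles as a rough confidence that the two videos really share the same audio.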

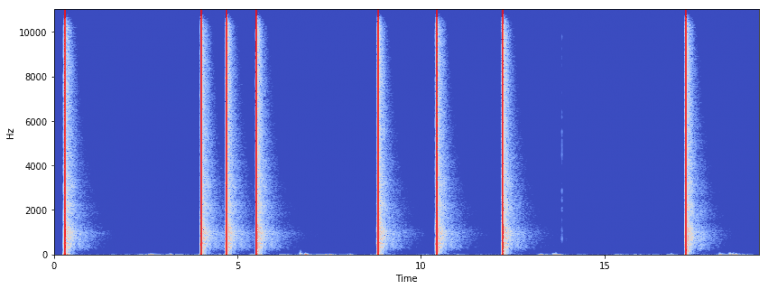

This research direction is being explored by open source contributor James Schull. Onset detection, a classical technique in computational audio analysis that involves no machine learning, does a reasonable job of isolating sharp, loud noises in an audio file (see the spectrograms above). It cannot, however, tell us much more about a particular noise (e.g. whether it was a gunshot or an explosion). To classify the sound, machine learning approaches are the most promising option.
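To make the idea of onset detection concrete, here is a minimal energy-based detector, one of several classical variants (spectral-flux methods are common in practice). It is a sketch, not the detector used in this research. The function names and the default `frame_size`, `ratio`, and `floor` values are illustrative assumptions. It splits the signal into frames, computes each frame’s root-mean-square energy, and reports an onset wherever energy jumps sharply relative to the previous frame.

```python
import math


def frame_energies(samples, frame_size):
    """Root-mean-square energy of consecutive, non-overlapping frames."""
    energies = []
    for start in range(0, len(samples) - frame_size + 1, frame_size):
        frame = samples[start:start + frame_size]
        energies.append(math.sqrt(sum(x * x for x in frame) / frame_size))
    return energies


def detect_onsets(samples, sample_rate, frame_size=512, ratio=4.0, floor=1e-3):
    """Return onset times in seconds.

    An onset is reported at the start of any frame whose energy is at least
    `floor` and at least `ratio` times the previous frame's energy (with the
    previous energy clamped to `floor`, so silence does not divide by zero).
    """
    energies = frame_energies(samples, frame_size)
    onsets = []
    for i in range(1, len(energies)):
        prev = max(energies[i - 1], floor)
        if energies[i] >= floor and energies[i] / prev >= ratio:
            onsets.append(i * frame_size / sample_rate)
    return onsets
```

As the text notes, a detector like this only says *when* something loud happened; a sustained loud passage produces a single onset at its start, and nothing in the output distinguishes a gunshot from an explosion.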

To solve this classification problem with machine learning, however, we require a training dataset of different sounds. Such a dataset is labour-intensive to source and annotate, and may be impossible to assemble for certain kinds of sounds if not enough accessible recordings exist. This is exactly the problem for which we used synthetic data in the visual domain, and synthetic audio might similarly offer a solution here. If you are interested in this research, please join the discussion on Discord in the #audio-classifier channel.