For our contribution to the 2019 Whitney Biennial at New York’s Whitney Museum of American Art, we developed a machine learning and computer vision workflow to identify tear gas grenades in digital images. We focused on a specific brand of tear gas grenade: the Triple-Chaser CS grenade in the catalogue of Defense Technology, which is a leading manufacturer of ‘less-lethal’ munitions.

Defense Technology is a subsidiary of the Safariland Group, an American arms manufacturer and dealer that is owned by Warren Kanders. Kanders is also the vice chair of the Whitney’s board. You can read more here about Safariland and the film we made together with Praxis Films for the Biennial.

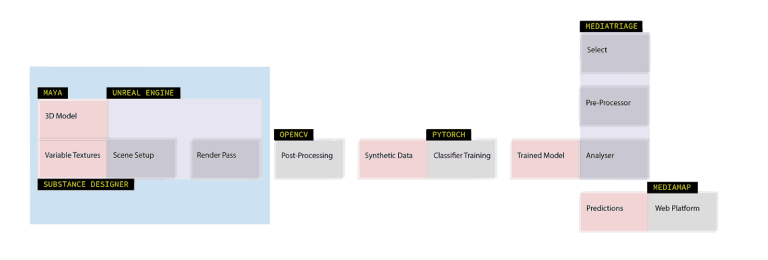

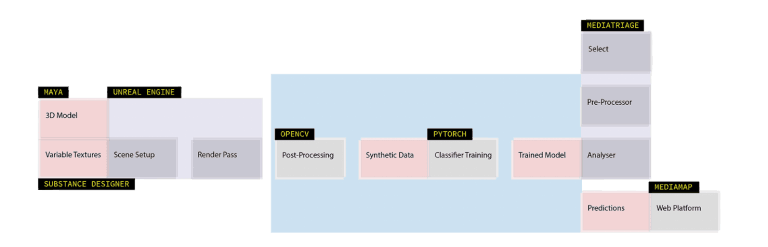

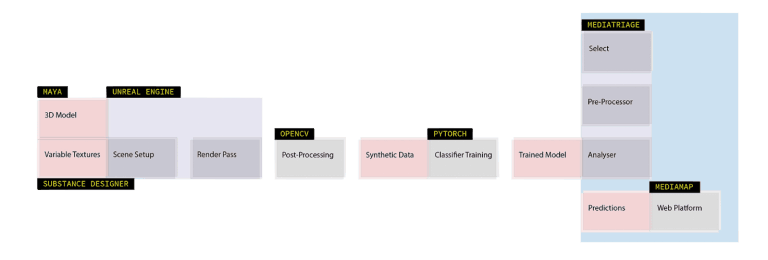

Building on previous research, we used ‘synthetic’ images generated with 3D software to train machine learning classifiers. This led to a pragmatic end-to-end workflow that we hope will also be useful for other open source human rights monitoring and research in general.

Synthetic Data - Rendering Training Sets with Unreal Engine

Deep learning is a state-of-the-art technique for classification tasks in computer vision, but developing an effective classifier usually requires a training set of thousands of annotated images. Not only is annotating real-world images costly and laborious, but building such a set also depends on enough images being available in the first place, and on their being appropriately licensed.

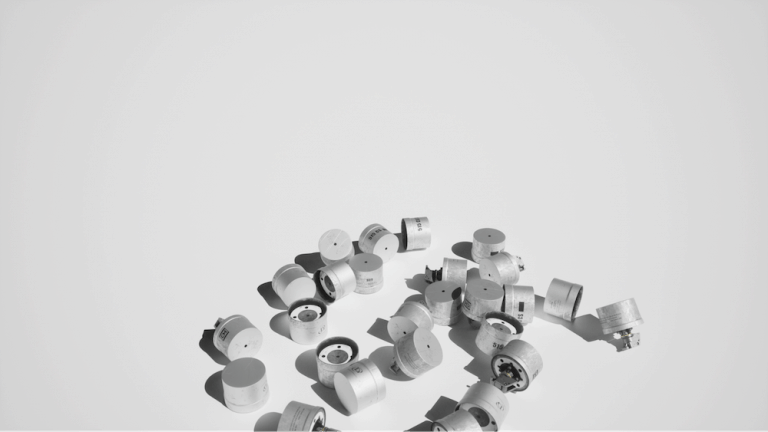

In our investigations, relevant images of a given object or scene are often in short supply. This is a widespread problem in the field of open source research. We found fewer than a hundred images of Defense Technology’s Triple-Chaser online; to train an effective classifier, we need around a thousand.

To bridge this ‘data gap’, we use datasets made of simulated or ‘synthetic’ images to train machine learning classifiers. We are not the only ones to consider such a possibility: NVIDIA and Microsoft are two dominant names who have conducted similar research.

Here is a non-exhaustive review of papers and posts on the state of recent research:

- AI in Unreal Engine: learning through virtual simulations, a blog post by NVIDIA.

- Training Deep Networks with Synthetic Data: Bridging the Reality Gap by Domain Randomization, a paper by NVIDIA.

- Falling Things: A Synthetic Dataset for 3D Object Detection and Pose Estimation, another paper by NVIDIA.

- Synthetic Computer Vision, a Github repository listing a range of synthetic projects.

- NVIDIA Deep learning Dataset Synthesizer, a Github repository by NVIDIA.

- SYNTHIA Dataset, a dataset of synthetic images for automotive learning.

We have developed a system to generate synthetic datasets using Epic’s Unreal Engine. We could have worked in several 3D frameworks, but we chose Unreal for the following reasons:

- Purpose-built for dynamic perspective. In previous research, we built an experimental framework in Maxon’s Cinema4D for this same purpose. Cinema4D is a general-purpose modelling and animation suite, similar in principle to Blender or Maya. While it has certain advantages, we found that it required us to script many camera, texture, and model variations from scratch. Indeed, many of the functions we require for a synthetic data generator (such as domain randomization, parametric textures, and variable lighting) are more common in game development than in animation. Unreal offers a lot of relevant functionality ‘out of the box’, and has a rich online support community.

- Powerful visual scripting. Unreal’s node-based visual scripting language, Blueprints, is more accessible than code for the architects, game designers, and filmmakers in our office. Using Blueprints for custom functionality increases the resources we can bring to bear on generating synthetic data, and also makes the system easier to develop and maintain.

- Full source code access. Unreal’s source code is available in its entirety (although you need an Epic Games account to view the GitHub repository). It is not strictly ‘open source’, as the license under which it is released does not allow for redistribution or adaptation, but source code access allows us to understand performance bottlenecks and to see the full scope of what is possible in the software.

- Real-time raytracing. Real-time rendering is the cherry on top of Unreal’s suitability for synthetic data generation. Because scenes render in real time, the back-and-forth between the generating and training phases has much shorter turnarounds than in our Cinema4D workflow. And as we have access to NVIDIA RTX 2080 Ti graphics cards, we were excited to experiment with real-time raytracing in Unreal Engine 4.22.

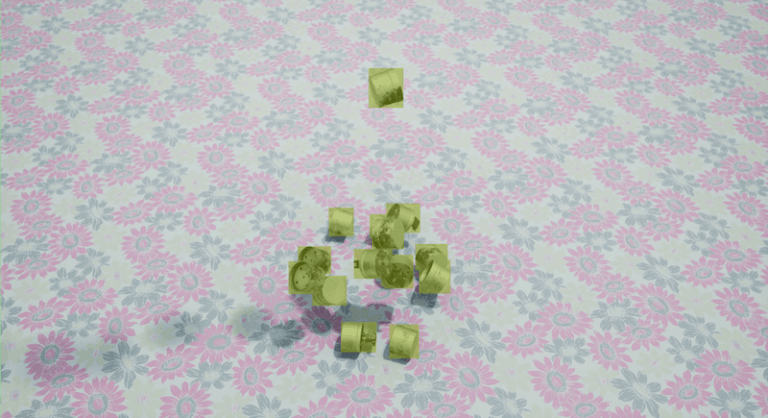

To refine our visual understanding of the Triple-Chaser beyond the images we found online, we reconstructed the object with photogrammetry in the software Reality Capture, using several 360-degree video clips sent to us by activists who had direct access to Triple-Chaser canisters.

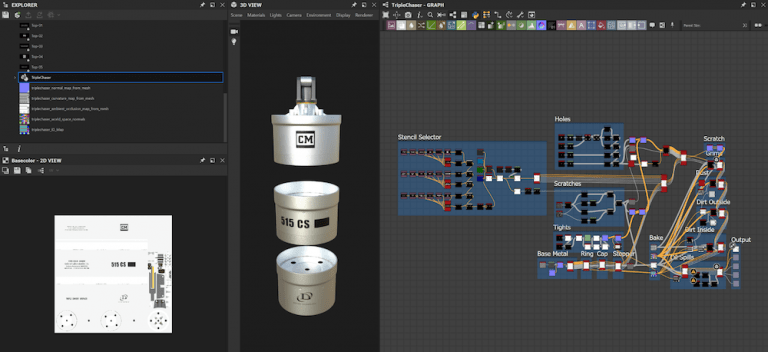

Referencing this model alongside found photographs and our other research, we used Adobe’s Substance Designer to create parametric (that is, variable along certain axes) photorealistic textures for the canister. Each of the following aspects of the texture can be modified, either continuously or discretely, at render time using the Unreal Substance plugin:

- Labels (color and content). The branding that appears on the Triple-Chaser varies around the world.

![Triple Chaser Brandings - Different brandings of the Triple Chaser appear on the canister in different locations. (Forensic Architecture)]() Different brandings of the Triple Chaser appear on the canister in different locations. (Forensic Architecture)

- Weathering and wear effects. Using mask generators in Substance, we simulated full-material weathering effects such as dust, dirt, and grime. We also simulated physical deformations such as scratches and bends.

![Substance screenshot - Weathering and wear effects are parameterised in Substance Designer.]() Weathering and wear effects are parameterised in Substance Designer.

![Canisters 2 - (Forensic Architecture)]() (Forensic Architecture)

In addition to parameterising the model’s texture, we programmed the following variables in a scene in Unreal using blueprints:

- Camera position randomisation.

- Camera settings such as exposure, focal length and depth of field.

- Image post-effects such as LUTs for color-correction variations, film grain, and vignette.

Using this pipeline, which begins with an accurate 3D model and renders it via seeded randomizations in texture, environment, and lighting, we were able to produce thousands of synthetic images depicting the Triple-Chaser.
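The idea of seeded randomisation can be sketched in Python. This is a minimal illustration rather than the Blueprint implementation itself: every parameter name, value range, label variant, and LUT name below is a hypothetical placeholder, and passing the sampled values into Unreal and the Substance plugin is not shown. The point it demonstrates is that one integer seed fully determines a render's texture and scene variables, so any image in a dataset can be regenerated exactly.

```python
import math
import random
from dataclasses import dataclass

# Hypothetical placeholders: the real label designs and LUTs are not specified.
LABEL_VARIANTS = ["variant_a", "variant_b", "variant_c"]
LUTS = ["neutral", "warm", "cool", "desaturated"]


@dataclass
class RenderParams:
    label_variant: str   # discrete texture parameter
    dirt: float          # continuous weathering amount in [0, 1)
    scratches: float     # continuous wear amount in [0, 1)
    camera_xyz: tuple    # camera position; assumed to be aimed at the canister at the origin
    focal_length_mm: float
    exposure_ev: float
    aperture_f: float    # f-number, which drives depth of field
    lut: str
    film_grain: float
    vignette: float


def sample_camera(rng, radius_range=(0.5, 3.0)):
    """Sample a camera position on a spherical shell around the origin (arbitrary units)."""
    r = rng.uniform(*radius_range)
    z = rng.uniform(-1.0, 1.0)                 # uniform z gives uniform area on the sphere
    theta = rng.uniform(0.0, 2.0 * math.pi)    # azimuth
    s = math.sqrt(1.0 - z * z)
    return (r * s * math.cos(theta), r * s * math.sin(theta), r * z)


def sample_params(seed):
    """Deterministically sample one render's parameters from an integer seed."""
    rng = random.Random(seed)
    return RenderParams(
        label_variant=rng.choice(LABEL_VARIANTS),
        dirt=rng.random(),
        scratches=rng.random(),
        camera_xyz=sample_camera(rng),
        focal_length_mm=rng.uniform(18.0, 135.0),
        exposure_ev=rng.uniform(-2.0, 2.0),
        aperture_f=rng.choice([1.8, 2.8, 4.0, 5.6, 8.0, 11.0]),
        lut=rng.choice(LUTS),
        film_grain=rng.uniform(0.0, 0.5),
        vignette=rng.uniform(0.0, 0.6),
    )


if __name__ == "__main__":
    for seed in range(3):
        print(seed, sample_params(seed))
```

Sampling the camera's z coordinate uniformly in [-1, 1] and its azimuth uniformly in [0, 2π) spreads viewpoints evenly over the sphere around the object, rather than clustering them near the poles as uniformly sampled angles would.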

The system we describe above renders synthetic data only for the Triple-Chaser. However, we have designed and developed the system with generalisation in mind. In the coming months, we aim to create a system that renders machine-learning-ready, annotated datasets for any given 3D model. This development is part of a larger project, which aims to train effective machine learning classifiers for a range of objects whose tracking is of interest to human rights and OSINT investigators. These include objects such as chemical weapons, tear gas canisters, illegal arms, and particular kinds of munition.

The results of these investigations will be made public and, where appropriate, the assets used will be made open source. New codebases and material will be published at forensic-architecture.org/subdomain/oss.

Machine Learning with Synthetic Data

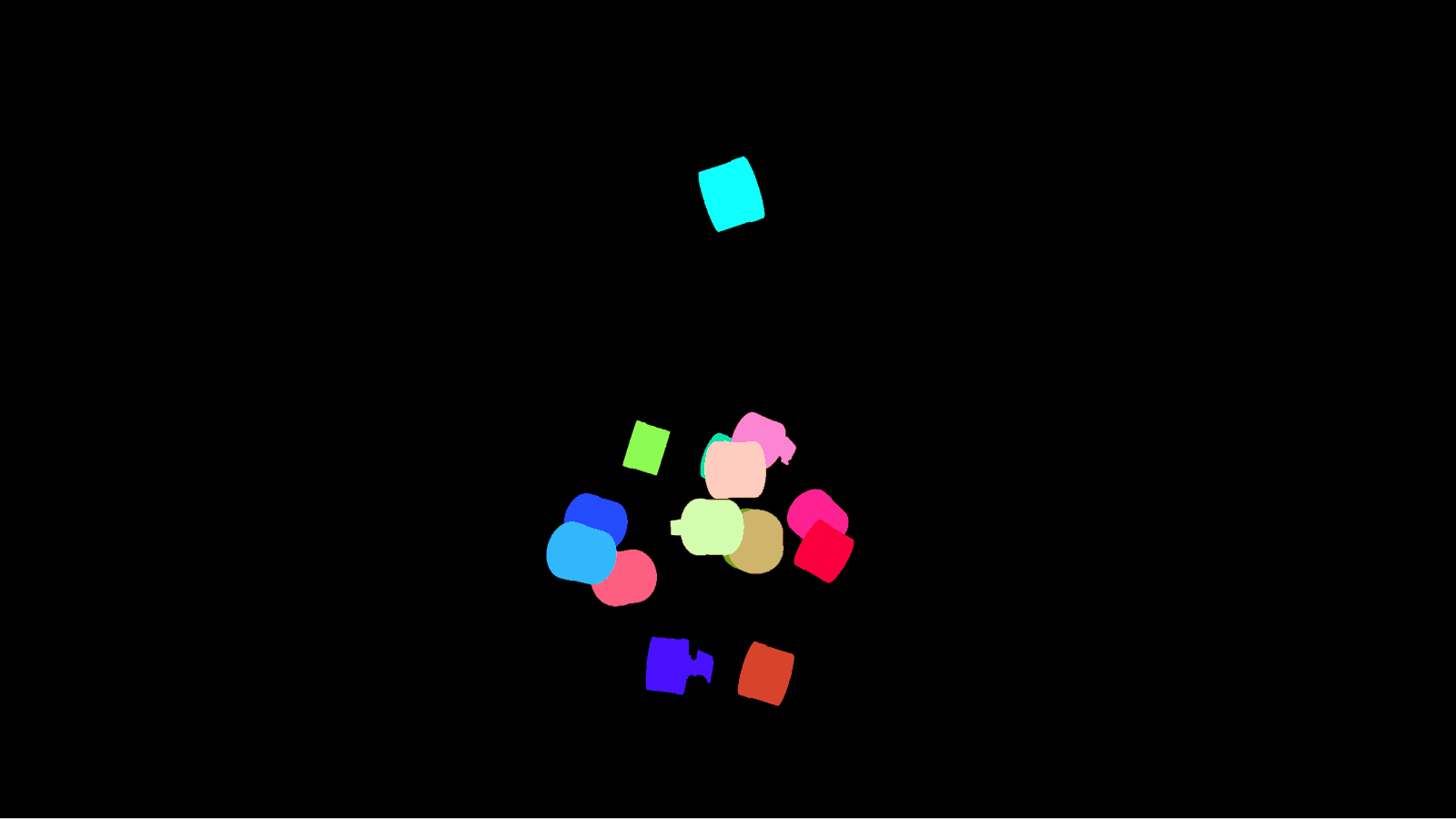

A major benefit of using synthetic training sets in a machine learning workflow is that image annotations come essentially for free. When images are rendered from a 3D scene, Unreal already knows which pixels belong to the object, so we can easily create an extra render artefact that contains the information needed for annotation. Annotating synthetic images is a simple two-step post-processing task:

- Render out an additional ‘mask’ for each image that designates which pixels represent the object of interest.

- Run a script to generate an appropriately-formatted annotation by comparing the original image and its mask. Annotations are generated in Supervisely format, a JSON object that contains bounding box coordinates and a base64 string representing the bitmap mask. The Supervisely format is easily transformed to a range of other common annotation formats, such as KITTI, COCO, or PASCAL.
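The second step might look like the following sketch. It assumes the mask render has already been loaded as a grid of 0/1 values (object pixels set to 1), and it only uses the mask, not the colour image. The JSON it emits is loosely modelled on Supervisely's combination of a bounding box and a base64 bitmap, but the field names and the bitmap encoding (zlib-compressed, one byte per pixel) are illustrative assumptions, not the exact Supervisely schema. A helper converts the box to COCO's `[x, y, width, height]` convention.

```python
import base64
import json
import zlib


def mask_to_annotation(mask, class_title="triple_chaser"):
    """Build a simplified, Supervisely-style annotation from a binary mask.

    `mask` is a list of rows in which truthy values mark object pixels.
    Field names and bitmap encoding are illustrative assumptions.
    """
    ys = [y for y, row in enumerate(mask) if any(row)]
    if not ys:
        return None  # the object is not visible in this render
    xs = [x for row in mask for x, v in enumerate(row) if v]
    x0, x1, y0, y1 = min(xs), max(xs), min(ys), max(ys)
    # Crop the mask to its bounding box and store one byte (0 or 1) per pixel.
    crop = bytes(
        1 if mask[y][x] else 0
        for y in range(y0, y1 + 1)
        for x in range(x0, x1 + 1)
    )
    encoded = base64.b64encode(zlib.compress(crop)).decode("ascii")
    return {
        "classTitle": class_title,
        "bbox": [[x0, y0], [x1, y1]],  # inclusive top-left and bottom-right pixels
        "bitmap": {
            "origin": [x0, y0],
            "size": [x1 - x0 + 1, y1 - y0 + 1],  # width, height
            "data": encoded,
        },
    }


def to_coco_bbox(annotation):
    """Convert inclusive corner pixels to COCO's [x, y, width, height]."""
    (x0, y0), (x1, y1) = annotation["bbox"]
    return [x0, y0, x1 - x0 + 1, y1 - y0 + 1]


if __name__ == "__main__":
    mask = [
        [0, 0, 0, 0],
        [0, 1, 1, 0],
        [0, 1, 0, 0],
    ]
    ann = mask_to_annotation(mask)
    print(json.dumps(ann["bbox"]), to_coco_bbox(ann))  # [[1, 1], [2, 2]] [1, 1, 2, 2]
```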

Training effective classifiers with synthetic data remains a work in progress. Here is an overview of some approaches we have tried so far:

Transfer learning. As many models come ‘pre-trained’ on standard sets of photographs, transfer learning is a useful technique for synthetic data. It affords the benefit of synthetic training data without relying on it entirely; training on synthetic data alone risks overfitting to it, leaving a classifier that has no luck classifying real-world images. Even though the distribution of standard datasets does not always align with that of our synthetic datasets, this approach has been promising.

Combined training: synthetic and real. In a phenomenon known as catastrophic forgetting, networks tend to quickly lose information from previous training when they are trained on new and different data. One technique to counteract this phenomenon is to mix synthetic images containing the target object with real-world photographs that contain other objects. Training the network to identify both kinds of object (one class that has real-world training data, and the other that has synthetic training data) seems to allow it to retain an ability to process real-world images while also learning the specific features of the object that are denoted in more detail by the synthetic images. Once the network is trained, we can essentially discard predictions of the real-world label, and only focus on the predictions for the synthetic label.
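A minimal sketch of the mixing and filtering steps, assuming each training example is a `(path, label)` pair and each prediction is a dict with `label` and `score` keys. The class names, the `real_per_synthetic` ratio parameter, and the 0.5 score cut-off are hypothetical choices for illustration; the training itself is left to whichever detection framework is in use.

```python
import random


def build_mixed_set(synthetic, real, real_per_synthetic=1.0, seed=0):
    """Combine synthetic target images with real photos of other classes.

    `synthetic` and `real` are lists of (path, label) pairs. The ratio of real
    to synthetic examples is a hypothetical tuning knob.
    """
    rng = random.Random(seed)
    n_real = min(len(real), round(len(synthetic) * real_per_synthetic))
    mixed = list(synthetic) + rng.sample(real, n_real)
    rng.shuffle(mixed)  # interleave domains so batches typically contain both
    return mixed


def keep_target_predictions(predictions, target_label, min_score=0.5):
    """After training, keep only confident predictions for the synthetic class."""
    return [
        p for p in predictions
        if p["label"] == target_label and p["score"] >= min_score
    ]


if __name__ == "__main__":
    synthetic = [(f"render_{i}.png", "triple_chaser") for i in range(4)]
    real = [(f"photo_{i}.jpg", "person") for i in range(10)]
    print(len(build_mixed_set(synthetic, real, real_per_synthetic=0.5)))  # 6
    preds = [
        {"label": "triple_chaser", "score": 0.91},
        {"label": "person", "score": 0.88},
        {"label": "triple_chaser", "score": 0.32},
    ]
    print(keep_target_predictions(preds, "triple_chaser"))
```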

Reverse discriminator. Discriminator modules are more commonly used when training networks that generate data than networks that classify it, as ours does. Discriminators have proven very effective, for example, at training a network to decide whether an image is real or fake. When training with synthetic data, however, we effectively want to forget that distinction, rather than learn it. We can use a reverse discriminator to train the network to become worse at distinguishing the data’s origin, i.e. the aspects of an image that make it clearly either a photograph or a synthetic render.
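One well-known way to build such a reverse discriminator is the gradient reversal layer from domain-adversarial training (Ganin and Lempitsky). The formulation below is that general technique, shown for illustration rather than as the exact setup used in these experiments. A feature extractor $G_f$ (parameters $\theta_f$) feeds both a label predictor $G_y$ ($\theta_y$) and a domain discriminator $G_d$ ($\theta_d$). $\mathcal{L}$ is the set of annotated (here, synthetic) images, $\mathcal{U}$ any additional photographs used only for the domain term, $d_i = 1$ marks a synthetic image and $d_i = 0$ a photograph, $\lambda$ weights the domain term, and $\mu$ is the learning rate:

```latex
\begin{aligned}
E(\theta_f, \theta_y, \theta_d) &=
  \sum_{i \in \mathcal{L}} L_y\big(G_y(G_f(x_i; \theta_f); \theta_y),\, y_i\big)
  - \lambda \sum_{i \in \mathcal{L} \cup \mathcal{U}} L_d\big(G_d(G_f(x_i; \theta_f); \theta_d),\, d_i\big) \\[4pt]
(\hat\theta_f, \hat\theta_y) &= \arg\min_{\theta_f, \theta_y} E(\theta_f, \theta_y, \hat\theta_d),
\qquad
\hat\theta_d = \arg\max_{\theta_d} E(\hat\theta_f, \hat\theta_y, \theta_d) \\[4pt]
\theta_f &\leftarrow \theta_f - \mu\left(\frac{\partial L_y}{\partial \theta_f} - \lambda \frac{\partial L_d}{\partial \theta_f}\right),
\quad
\theta_y \leftarrow \theta_y - \mu \frac{\partial L_y}{\partial \theta_y},
\quad
\theta_d \leftarrow \theta_d - \mu \lambda \frac{\partial L_d}{\partial \theta_d}
\end{aligned}
```

The reversal layer acts as the identity on the forward pass and multiplies gradients by $-\lambda$ on the backward pass. The discriminator therefore keeps improving at telling synthetic images from photographs, while the shared features are pushed in the opposite direction, towards representations in which that origin is hard to detect.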

To date, we have tried transfer learning using state-of-the-art object detection algorithms such as Mask R-CNN, YOLOv3, and UNet VGG. As noted above, this research is ongoing, and we do not yet have comprehensive metrics regarding which architectures are the most effective. In addition to bounding box detection and semantic segmentation, we are also experimenting with Optical Character Recognition (OCR) algorithms, which can be used to predict a canister’s manufacturer after it has been detected in an image.

Mtriage: Streamlining Web Scraping and Computer Vision

Although an effective classifier is interesting to experiment with in laboratory conditions, it is only useful for open source research if there is a structured workflow for deploying it on public domain data. In previous research, we wrote bespoke, investigation-specific scripts to crawl platforms for particular objects of interest or keywords. While this approach works well, it requires dedicated time and technical labour.

The bulk of media that we use in investigations comes from familiar platforms: YouTube, Twitter, Facebook. These platforms have robust APIs, but of course they differ in various ways: in the authentication they require, the search parameters they make available, and the media formats they support. What we want is a way to download and analyse content from a range of major platforms in a structured way, using the same set of search terms.

To deploy a computer vision classifier, for example, we want to scrape each platform for videos and images, and split every video into individual frames, since classifiers generally operate on single images. (There are classifiers that take video as input, but they are not as well researched or as robust as image-based classifiers.) We then want to apply the classifier to every image and frame sampled, predicting the likelihood that a canister appears in each. Next, we want to run optical character recognition on the images with a confident canister prediction, to see whether any text is visible. If there is text, we want to compare it with phrases that indicate the canister’s manufacturer.
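The canister-specific chain of steps above can be sketched as follows. `classify` and `ocr` are placeholders for whatever detector and OCR engine are in use; the indicator phrases, the 0.8 confidence threshold, the one-frame-per-second sampling rate, and the fuzzy-match cut-off are all hypothetical values. Fuzzy matching with Python's standard `difflib` is one assumed way to tolerate OCR errors, such as a zero read in place of the letter O.

```python
import difflib

# Hypothetical indicator phrases; a real list would come from the investigation.
MANUFACTURER_PHRASES = {
    "Defense Technology": ["defense technology", "triple-chaser", "triple chaser"],
}


def frame_timestamps(duration_s, every_s=1.0):
    """Times (in seconds) at which to sample single frames from a video."""
    return [i * every_s for i in range(int(duration_s // every_s) + 1)]


def match_manufacturer(text, phrases=MANUFACTURER_PHRASES, cutoff=0.8):
    """Fuzzy-match OCR output against indicator phrases to tolerate OCR noise."""
    words = text.lower().split()
    hits = set()
    for maker, candidates in phrases.items():
        for phrase in candidates:
            n = len(phrase.split())
            # Compare the phrase with every run of n consecutive OCR words.
            windows = [" ".join(words[i:i + n]) for i in range(len(words) - n + 1)]
            if difflib.get_close_matches(phrase, windows, n=1, cutoff=cutoff):
                hits.add(maker)
    return sorted(hits)


def triage(images, classify, ocr, threshold=0.8):
    """Classify every image or frame, then run OCR only on confident detections.

    `classify(image) -> float` and `ocr(image) -> str` are placeholders.
    """
    results = []
    for image in images:
        score = classify(image)
        if score < threshold:
            continue  # skip frames unlikely to contain a canister
        text = ocr(image)
        results.append({
            "image": image,
            "score": score,
            "text": text,
            "manufacturers": match_manufacturer(text),
        })
    return results


if __name__ == "__main__":
    frames = [f"frame_{t:.0f}" for t in frame_timestamps(3.5)]
    scores = {"frame_1": 0.93, "frame_2": 0.41}
    out = triage(frames, lambda f: scores.get(f, 0.0), lambda f: "DEFENSE TECHN0LOGY CS")
    print(out)
```

Running OCR only on frames that pass the classifier threshold keeps the more expensive text-recognition pass proportional to the number of likely detections rather than to the total number of frames scraped.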

This workflow can be described more generally and become a process that is useful during OSINT investigations at large. We want to scrape media from platforms, leveraging existing search functionalities, and subsequently run one or more computational passes on the results. The computational passes tell us something about the media, which is useful either to a human researcher or as input to some other computational pass. Although in the Safariland investigation we are only looking to analyse visual data using computer vision classifiers, in other investigative contexts we are interested in analysing, for example, media audio to locate distinctive noises such as a gunshot, or analysing the media metadata from independent websites.

Therefore, we developed a command-line tool, mtriage, as a framework to scrape and analyse public domain media in the way described above. Mtriage is a framework that orchestrates two different kinds of components:

- Selectors, which search for and download media from various platforms.

- Analysers, which derive data from media that has been retrieved by a selector.

See Mtriage on GitHub.
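The split between selectors and analysers can be illustrated with a small Python sketch. This is not mtriage's actual API: the class names, method signatures, and the `Element` container below are hypothetical, chosen only to show how a selector retrieves media once and how analysers run as successive passes, with later passes able to read the output of earlier ones.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass, field


@dataclass
class Element:
    """One retrieved media item plus data derived from it by analysers."""
    id: str
    media: object
    derived: dict = field(default_factory=dict)


class Selector(ABC):
    """Searches a platform and returns media elements (hypothetical interface)."""

    @abstractmethod
    def select(self, query: str) -> list: ...


class Analyser(ABC):
    """Derives data from elements; later analysers may read earlier results."""
    name = "analyser"

    @abstractmethod
    def analyse(self, element: Element) -> object: ...


def run(selector, analysers, query):
    """Retrieve media once, then apply each analyser as a successive pass."""
    elements = selector.select(query)
    for analyser in analysers:
        for element in elements:
            element.derived[analyser.name] = analyser.analyse(element)
    return elements


# Toy components standing in for real platform selectors and analysers.
class ListSelector(Selector):
    def __init__(self, items):
        self.items = items

    def select(self, query):
        return [Element(str(i), m) for i, m in enumerate(self.items) if query in m]


class WordCount(Analyser):
    name = "word_count"

    def analyse(self, element):
        return len(element.media.split())


class IsLong(Analyser):
    name = "is_long"

    def analyse(self, element):
        return element.derived["word_count"] > 3  # consumes the previous pass


if __name__ == "__main__":
    selector = ListSelector(["tear gas canister seen here today", "tear gas", "other"])
    for el in run(selector, [WordCount(), IsLong()], "tear gas"):
        print(el.id, el.derived)
```

Keeping retrieval and analysis behind separate interfaces means a new platform only needs a new selector, and a new computational pass (a classifier, OCR, or an audio detector) only needs a new analyser.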

Mtriage is available under the DoNoHarm license on GitHub. Please note that it is currently (May 2019) in active development at Forensic Architecture. We are incorporating new selectors and analysers into the framework as we develop them for our investigations. For more detail and instructions on how to use mtriage in your own research, visit the GitHub page linked above. We welcome community pull requests and new contributors.