This post was written by Frank Longford, who worked with Forensic Architecture during a six-week data science fellowship from Faculty (formerly ASI).

Increasingly, Forensic Architecture uses machine learning techniques in a range of ways to enhance our data-gathering capacity during open source investigations. In many contexts, the abundance of potentially relevant data, and the requirement to search through that data, presents a significant logistical challenge to investigators. To this end, we are experimenting with the use of machine learning tools to channel investigative resources effectively.

One way to constructively pre-process open source video material using machine learning techniques is to ‘flag’ videos that are likely to contain objects of interest. Using algorithmic classification, we can index and sort media content. For example, suppose we are interested in determining which frames within a given video capture military vehicles, such as tanks.
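As a rough illustration of what 'flagging' could look like once a classifier has scored every frame, here is a minimal sketch. The function name `flag_frames`, the 0.5 threshold and the example scores are all hypothetical and not taken from our pipeline.

```python
from typing import Dict, List


def flag_frames(frame_scores: Dict[int, float], threshold: float = 0.5) -> List[int]:
    """Return the indices of frames whose score meets the threshold,
    ordered from the highest score to the lowest."""
    flagged = [(score, idx) for idx, score in frame_scores.items() if score >= threshold]
    # Sort by descending score; ties are broken by frame index.
    flagged.sort(key=lambda pair: (-pair[0], pair[1]))
    return [idx for _, idx in flagged]


# Hypothetical per-frame 'tank' scores, keyed by frame index.
scores = {0: 0.02, 1: 0.91, 2: 0.47, 3: 0.88}
print(flag_frames(scores))  # [1, 3]
```

An investigator would then review the flagged frames first, rather than watching the whole video.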

Tanks are, generally, evidence of an escalated level of violence, and an indication that a nation-state may be involved in a given conflict. They also have a relatively distinctive shape and size, which renders them more easily detectable to algorithmic classifiers.

In order to train machine learning classifiers to detect a given object (the ‘object of interest’) in an image or video frame, a large dataset of images of the object of interest is usually necessary.

Images of the objects of interest in the context of human rights violations (for example military vehicles, or chemical weapons) are often rare, however. Forensic Architecture has been experimenting with how to supplement a dataset of real images with additional ‘synthetic’ counterparts.

Generating tanks

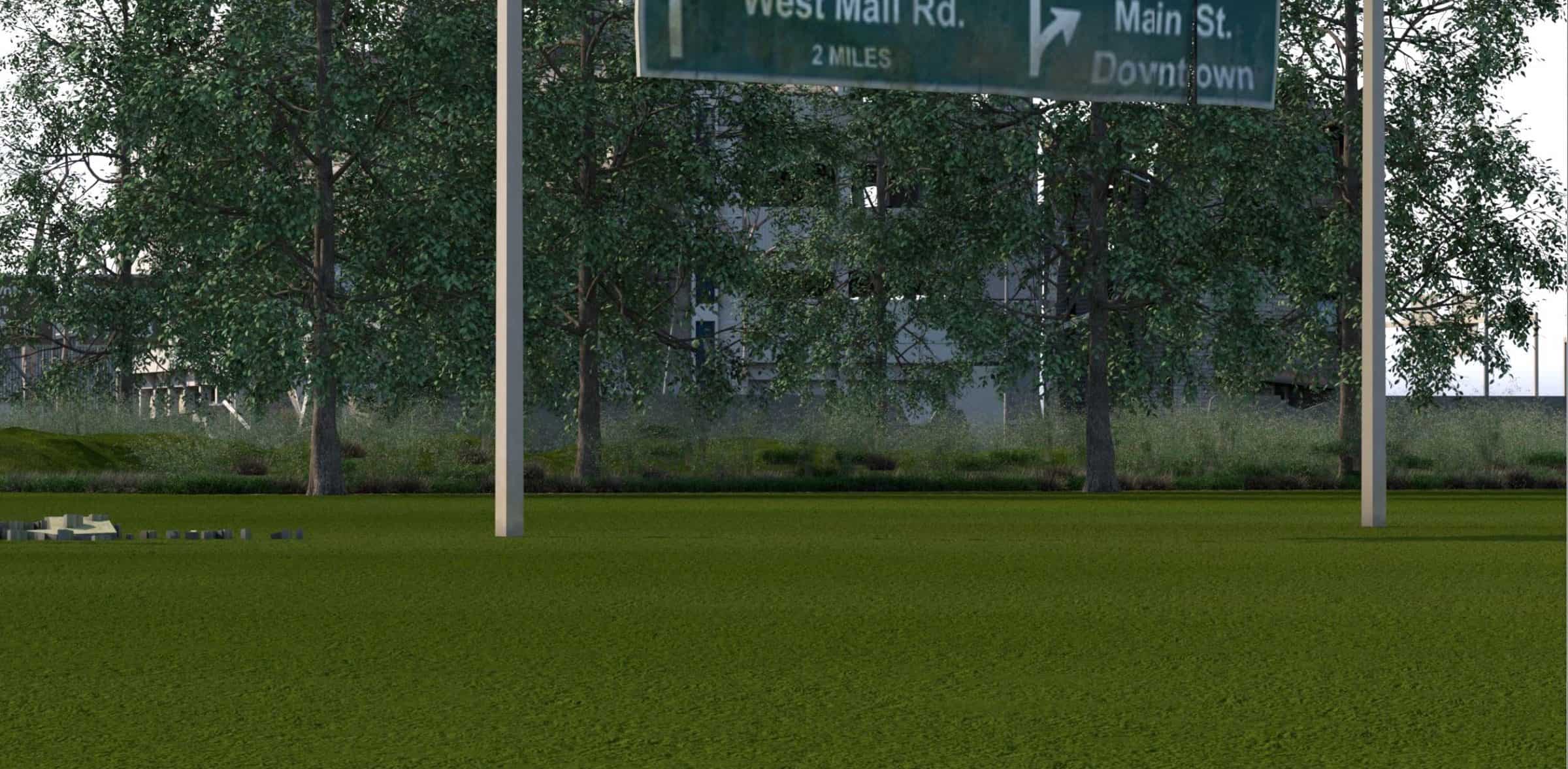

When we lack a training dataset for a particular object of interest, we can supplement it by digitally modelling the object and ‘photographing’ it in a simulated environment. Parametrising the object and its environment allows for effectively unlimited variations of output data. Variation is an important characteristic of training data, because it helps to ensure that classifiers do not overfit.

Pipeline Overview

Our pipeline is set up in MAXON’s Cinema 4D (C4D). This 3D modelling and animation software gives us control over animation parameters through its node-based editor, XPresso. The node-based approach to parametrising variables can be replicated in other software with similar pipelines (Blender, Unity, etc.). We have developed a modular file set-up in which we can easily interchange objects of interest to create synthetic datasets. For this case study we used a detailed tank model as an object of interest, and simulated it across both rural and urban environments. We then tested how render settings influenced the classification scores of the rendered images.

Environmental and Object Settings

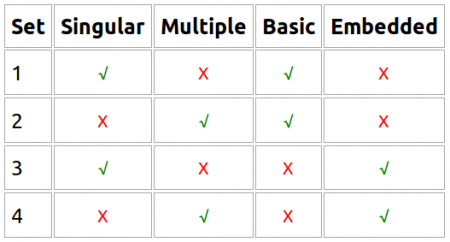

Table columns: Basic, Singular, Embedded, Multiple

We generated several variations of synthetic datasets, containing singular or multiple tanks, in either a ‘basic’ or ‘embedded’ environment. A basic environment has no urban infrastructure or natural features other than ground and sky. An embedded environment generates variation in the surrounding context. The table below outlines the set of combinations of these conditions that we used to test the efficacy of rendered images.
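The four combinations of environment and tank count can be enumerated programmatically. The sketch below is only an illustration: the dictionary keys are hypothetical, and the order in which the combinations are printed is not meant to imply the numbering of the sets used later in this post.

```python
from itertools import product

ENVIRONMENTS = ("basic", "embedded")
TANK_COUNTS = ("singular", "multiple")


def dataset_conditions():
    """List every pairing of environment type and tank count."""
    return [
        {"environment": env, "tanks": count}
        for env, count in product(ENVIRONMENTS, TANK_COUNTS)
    ]


for condition in dataset_conditions():
    print(condition)
```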

These conditions are chosen to represent the range of ways in which a tank could appear in a given image. The position of the camera is varied around the object, and throughout the duration of the camera’s movement, 2D images are rendered at regular intervals. These images are compiled into a synthetic dataset.
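To make the idea of rendering at regular intervals along a camera path concrete, here is a minimal sketch that places a camera at evenly spaced points on a circle around an object at the origin. It is written in plain Python rather than XPresso, and the circular path, the y-up axis convention and the parameter names are assumptions made for illustration only.

```python
import math
from typing import List, Tuple


def orbit_positions(radius: float, height: float, n_frames: int) -> List[Tuple[float, float, float]]:
    """Return n_frames camera positions (x, y, z), evenly spaced on a
    horizontal circle around the origin. The y axis is treated as 'up'."""
    if n_frames <= 0:
        raise ValueError("n_frames must be positive")
    positions = []
    for i in range(n_frames):
        angle = 2 * math.pi * i / n_frames  # equal angular steps
        positions.append((radius * math.cos(angle), height, radius * math.sin(angle)))
    return positions


# Eight viewpoints, 10 units from the object and 2 units above the ground.
for position in orbit_positions(radius=10.0, height=2.0, n_frames=8):
    print(position)
```

Each position would correspond to one rendered frame. Varying `radius` and `height` between passes is one simple way to add further variation to the dataset.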

In order to evaluate whether the synthetic dataset is useful in a machine learning context, we tested the performance of an open-source Convolutional Neural Network (CNN) on it.

To benchmark, we used a ‘MobileNet’ CNN, which has been pre-trained on the ImageNet dataset, and already includes a label for ‘tank’ in its default 1000 classes. Further information about image classifiers and a good introduction to CNN architecture can be found here.
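For readers who want to try something similar, the sketch below shows one way to obtain a 'tank' score from an ImageNet-pretrained MobileNet. It assumes the Keras implementation that ships with TensorFlow, which is not necessarily the implementation used in this study, and the function names `tank_score` and `classify_image` are hypothetical.

```python
from typing import List, Tuple


def tank_score(decoded: List[Tuple[str, str, float]], label: str = "tank") -> float:
    """Pick the probability for `label` out of a list of
    (class_id, class_name, score) tuples; return 0.0 if it is absent."""
    for _class_id, name, score in decoded:
        if name == label:
            return float(score)
    return 0.0


def classify_image(path: str) -> float:
    """Score a single image file with MobileNet (requires TensorFlow)."""
    # Imported lazily so that tank_score can be used without TensorFlow.
    import numpy as np
    import tensorflow as tf

    mobilenet = tf.keras.applications.mobilenet
    model = mobilenet.MobileNet(weights="imagenet")  # downloads weights on first use
    image = tf.keras.utils.load_img(path, target_size=(224, 224))
    batch = np.expand_dims(tf.keras.utils.img_to_array(image), axis=0)
    predictions = model.predict(mobilenet.preprocess_input(batch))
    # top=1000 returns every class, so the label is found whatever its rank.
    return tank_score(mobilenet.decode_predictions(predictions, top=1000)[0])
```

Calling `classify_image` on a rendered frame returns a value between 0 and 1, which can be compared directly with the score of a real photograph.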

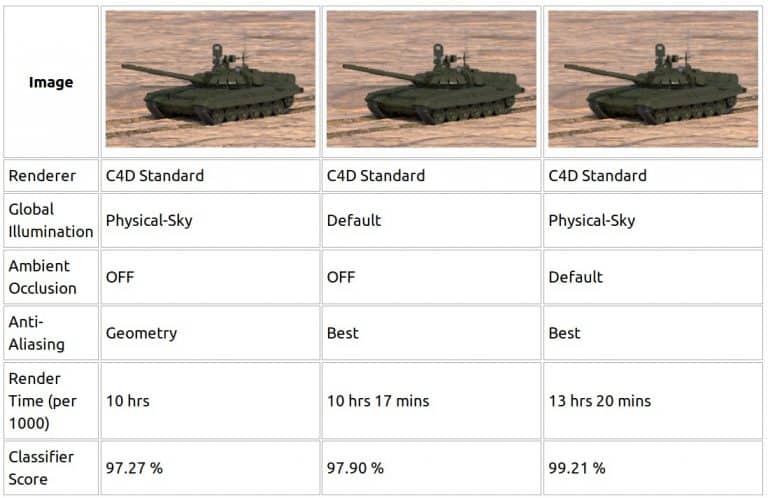

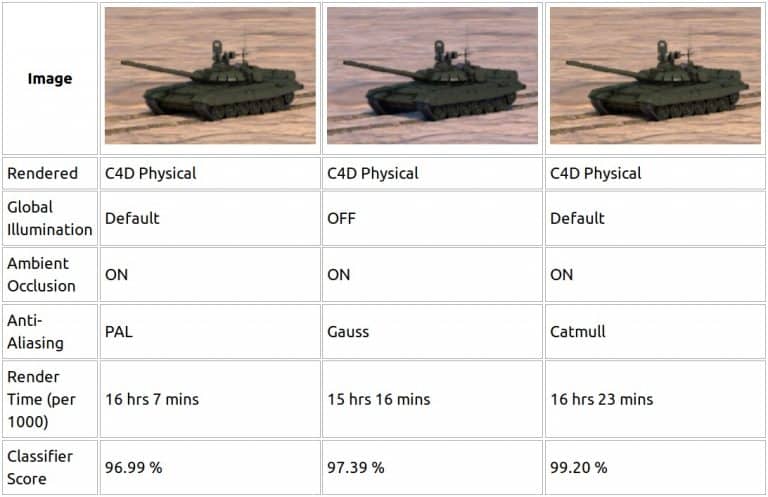

We used MAXON’s Cinema 4D software to generate the environments from which we rendered images. To see how the software’s render settings might affect a classifier’s performance, we took a single, real image of a tank that returned a strong prediction score (98.5%) from the classifier, and modelled a replica of that image’s contents and environmental conditions.

Image labels: Real, Synthetic

The same synthetic image was then rendered multiple times, according to different render settings. The rendered images were then classified using the MobileNet CNN. A brief overview of our findings is shown below. The classifier’s score on the real image, 98.5%, is the benchmark for the scores achieved on synthetic images.

Simple tests like these begin to guide our later, more complex processes for rendering synthetic image datasets.

Performance of pre-trained classifiers (metrics)

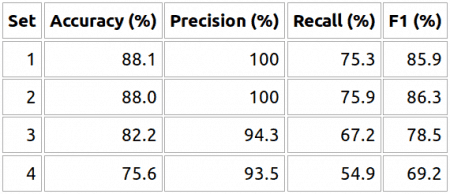

There were observable differences in classifier score when we altered either image content or render settings. Below are the best render settings for each of sets 1 through 4. (The table with checks and crosses earlier in this post describes how the sets differ.)

The introduction of embedded environments (sets 3 and 4) introduces significant variation in MobileNet’s performance. In particular, the recall scores for images containing multiple tanks in an embedded environment are much lower than any other performance measure. This is significant, since recall falls as the number of false negative results, or tanks ‘missed’ by the classifier, rises. It therefore seems that images containing multiple objects with varying environmental settings are much more challenging for our image classifier to process. This is most likely because in those images, the ‘object of interest’ does not reflect the form of the real images that MobileNet was trained on.
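For reference, precision is TP / (TP + FP) and recall is TP / (TP + FN), where TP, FP and FN are the counts of true positives, false positives and false negatives. The short sketch below computes both from lists of ground-truth and predicted labels; the example values are hypothetical and are not results from this study.

```python
from typing import Sequence, Tuple


def precision_recall(y_true: Sequence[bool], y_pred: Sequence[bool]) -> Tuple[float, float]:
    """Compute (precision, recall) for binary 'contains a tank' labels."""
    tp = sum(t and p for t, p in zip(y_true, y_pred))        # tanks found
    fp = sum((not t) and p for t, p in zip(y_true, y_pred))  # false alarms
    fn = sum(t and (not p) for t, p in zip(y_true, y_pred))  # tanks missed
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


# Hypothetical case: four tank images, of which the classifier finds only one,
# and two images without tanks, neither of which raises a false alarm.
truth = [True, True, True, True, False, False]
preds = [True, False, False, False, False, False]
print(precision_recall(truth, preds))  # (1.0, 0.25)
```

In this example precision stays perfect while recall drops to 0.25, which is the pattern that many missed tanks would produce.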

This indicates that it might be possible to improve classifier performance by retraining the outer layers of the CNN with synthetic image datasets such as sets 3 and 4 above.

'Feature score' clustering

Using synthetic data to train classifiers is likely to introduce unforeseen biases or ‘overfitting’. We investigated the ‘appropriateness’ of each dataset by analysing them against all of the ‘feature scores’ MobileNet predicted (as opposed to focusing on just the ‘tank’ label).

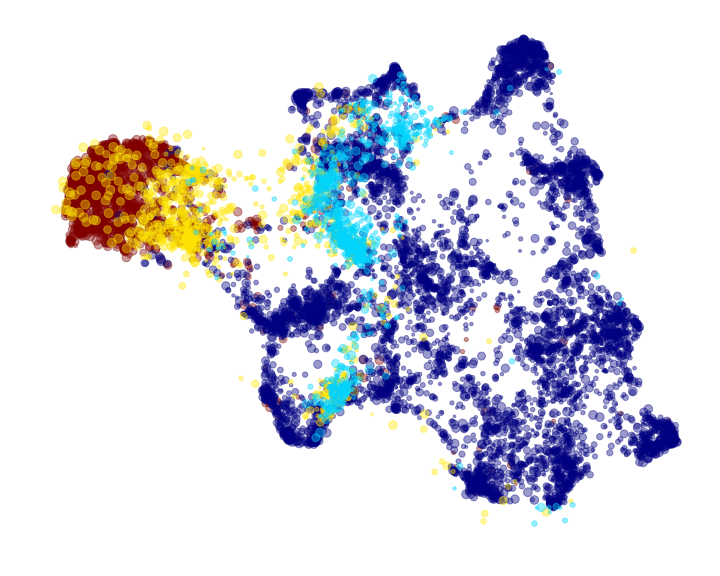

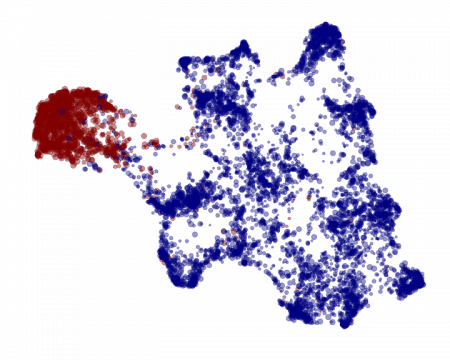

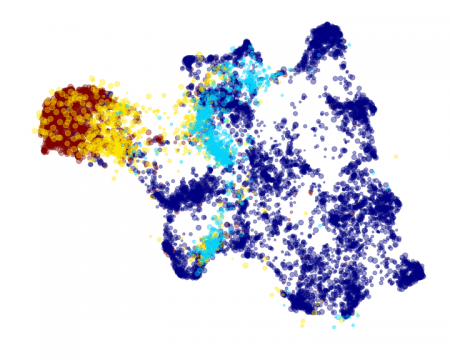

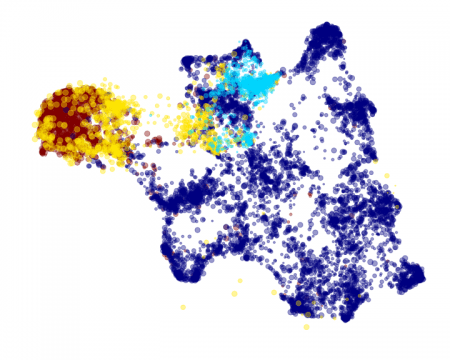

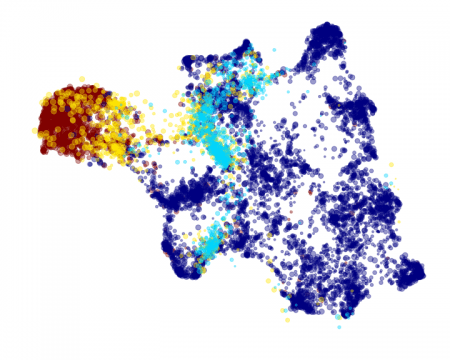

We clustered the vectors returned from the classifier. Each data point represents an image, and the distribution of points reflects the similarity of the classifier’s predicted content.

In the example below we trained a UMAP clusterer on the feature vectors taken from a dataset of real images. The clear separation of images we know to contain tanks, shown in red at the top left, and those we know do not, shown everywhere else in blue, signifies good classifier performance.

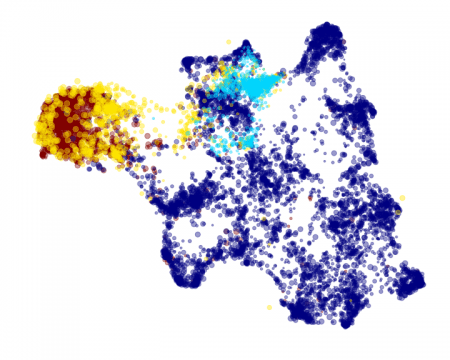

Overlaying each synthetic dataset reveals the distribution of simulated images containing tanks in yellow and not containing tanks in cyan. An appropriate distribution would therefore show the yellow data points overlapping the red data points and the cyan data points overlapping the blue data points.
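The fit-then-overlay step described above could be sketched as follows. This assumes the `umap-learn` Python package, which may not be the implementation used for the plots in this post; the function names and the `random_state` value are hypothetical.

```python
def embed_real_then_overlay(real_vectors, synthetic_vectors, random_state=42):
    """Fit UMAP on real feature vectors, then project the synthetic
    vectors into the same 2D space so the two can be plotted together."""
    import umap  # from the umap-learn package; imported lazily

    reducer = umap.UMAP(n_components=2, random_state=random_state)
    real_2d = reducer.fit_transform(real_vectors)
    synthetic_2d = reducer.transform(synthetic_vectors)
    return real_2d, synthetic_2d


def point_colour(is_synthetic: bool, has_tank: bool) -> str:
    """Colour scheme used in the plots: red/blue for real images,
    yellow/cyan for synthetic ones."""
    if is_synthetic:
        return "yellow" if has_tank else "cyan"
    return "red" if has_tank else "blue"
```

Fitting on the real images only, and then transforming the synthetic ones, keeps the layout of the real data fixed, so that each synthetic dataset is overlaid on the same reference map.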

A quick look at each graph generally reveals good overlap between the synthetic and real images. However, in datasets 1 and 2 there is a slight systematic offset in the overlap of the synthetic tank (yellow) images and real tank (red) images, which may signify an artificial bias.
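The offset was assessed by eye in this study. One simple way to put a number on it, offered here only as an illustrative assumption and not as the method we used, is to measure the distance between the centroids of the real and synthetic tank points in the 2D projection. The example coordinates are hypothetical.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def centroid(points: Sequence[Point]) -> Point:
    """Mean position of a set of 2D points."""
    if not points:
        raise ValueError("at least one point is required")
    n = len(points)
    return (sum(p[0] for p in points) / n, sum(p[1] for p in points) / n)


def centroid_offset(real: Sequence[Point], synthetic: Sequence[Point]) -> float:
    """Euclidean distance between the centroids of two point sets."""
    (rx, ry), (sx, sy) = centroid(real), centroid(synthetic)
    return math.hypot(sx - rx, sy - ry)


# Hypothetical 2D coordinates for real (red) and synthetic (yellow) tank images.
real_tanks = [(0.0, 0.0), (2.0, 0.0)]
synthetic_tanks = [(3.0, 4.0), (5.0, 4.0)]
print(centroid_offset(real_tanks, synthetic_tanks))  # 5.0
```

A value that is large relative to the spread of the real tank cluster would suggest a systematic shift rather than random scatter.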

Interestingly, this bias appears to decrease in the embedded datasets (3 and 4), and the overlap of synthetic and real images containing tanks becomes progressively more stochastic as multiple tanks or environmental embedding are introduced. This may signify that MobileNet has not been trained on images with similar content.

Therefore, retraining with images taken from datasets 3 or 4 is likely to be more effective than retraining with datasets 1 and 2.

Next steps

We are now attempting to use the datasets that we have rendered to train classifiers, and have the following ideas for improving our synthetic rendering pipeline:

- Render datasets for other objects of interest. If you are a human rights organisation with a use case or otherwise in need of a dataset, please do not hesitate to reach out to us.

- Conduct further tests on the impact of camera sensor variations (motion blur and depth of field settings) and run more varied scenes through different render setting variations.

- Adapt the scene to other modelling and animation software such as Blender or Unity, and test the impact of render settings across other renderers.

- Experiment with post-processing the images to make them more appropriate for classifier use. For example, there are many interesting techniques using Generative Adversarial Networks (GANs) that could make rendered images more viable for training.

Appendix

Note on Classification vs Detection

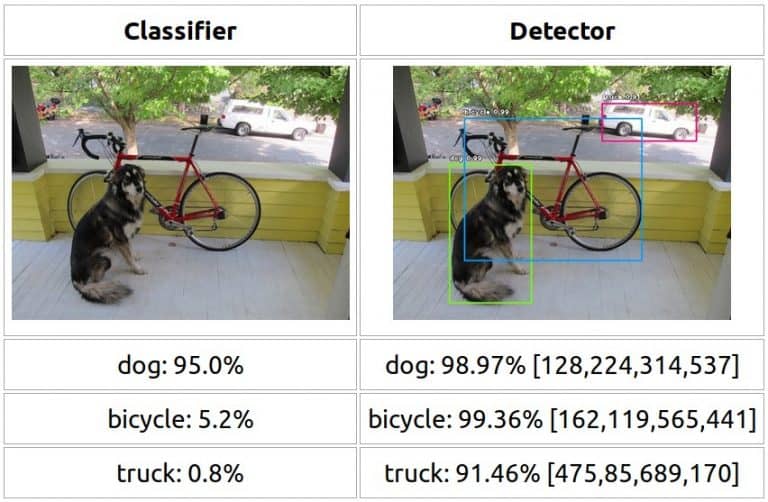

CNNs process images and return output probabilities that correspond to the labels they have been trained to detect. Whereas image classifiers return only a single probability per available label, object detectors can return more information about the multiple places where an object could reside within a single image.

In the example image, the image classifier returns a single set of predictions, whereas the object detector identifies the location of each separate object, as well as returning its most likely prediction score.

For this study we defaulted to using image classifiers, since a larger number of open source variants are available that have already been pre-trained on images of tanks. This is largely thanks to the ImageNet online 1000 class image recognition challenge including tanks as one of the requisite labels.
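The difference between the two kinds of output can be sketched as data structures. Everything in the example below is hypothetical: the `Detection` class, the box format and the scores are illustrative and do not correspond to any particular library.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class Detection:
    """One object found by a detector."""
    label: str
    score: float
    box: Tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max) in pixels


def detections_to_image_scores(detections: List[Detection]) -> Dict[str, float]:
    """Collapse detector output to one score per label by keeping the
    highest-scoring box for each label."""
    scores: Dict[str, float] = {}
    for det in detections:
        scores[det.label] = max(det.score, scores.get(det.label, 0.0))
    return scores


# A classifier returns one probability per label for the whole image...
classifier_output = {"tank": 0.93, "truck": 0.04}

# ...whereas a detector returns a label, a score and a location per object.
detector_output = [
    Detection("tank", 0.95, (10, 40, 210, 160)),
    Detection("tank", 0.81, (230, 60, 400, 170)),
]
print(detections_to_image_scores(detector_output))  # {'tank': 0.95}
```

Keeping only the highest box score is one simple way to compare a detector with a classifier on the same images, although the resulting value is not a calibrated image-level probability.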