In Partnership With

- Bellingcat

- Amnesty International

- Open source contributors

This project originally premiered at the exhibition ‘Uncanny Valley: Being human in the age of AI’ at the de Young Museum in San Francisco.

The growing field of ‘computer vision’ relies increasingly on machine learning classifiers: algorithmic processes that can be trained to identify a particular type of object (such as cats or bridges). Training a classifier to recognise such objects usually requires thousands of images of that object in different conditions and contexts.

For certain objects, however, there are too few images available. Even where images do exist, the process of collecting and annotating them can be extremely labor-intensive.

Since 2018, Forensic Architecture has been working with ‘synthetic images’ (photorealistic digital renderings of 3D models) to train classifiers to identify objects of this kind, such as munitions. Automated processes that deploy those classifiers have the potential to save months of manual, human-directed research.

Forensic Architecture’s ‘Model Zoo’ includes a growing collection of 3D models of munitions and weapons, as well as the different classifiers trained to identify them, forming a catalogue of some of the most horrific weapons used in conflict today.

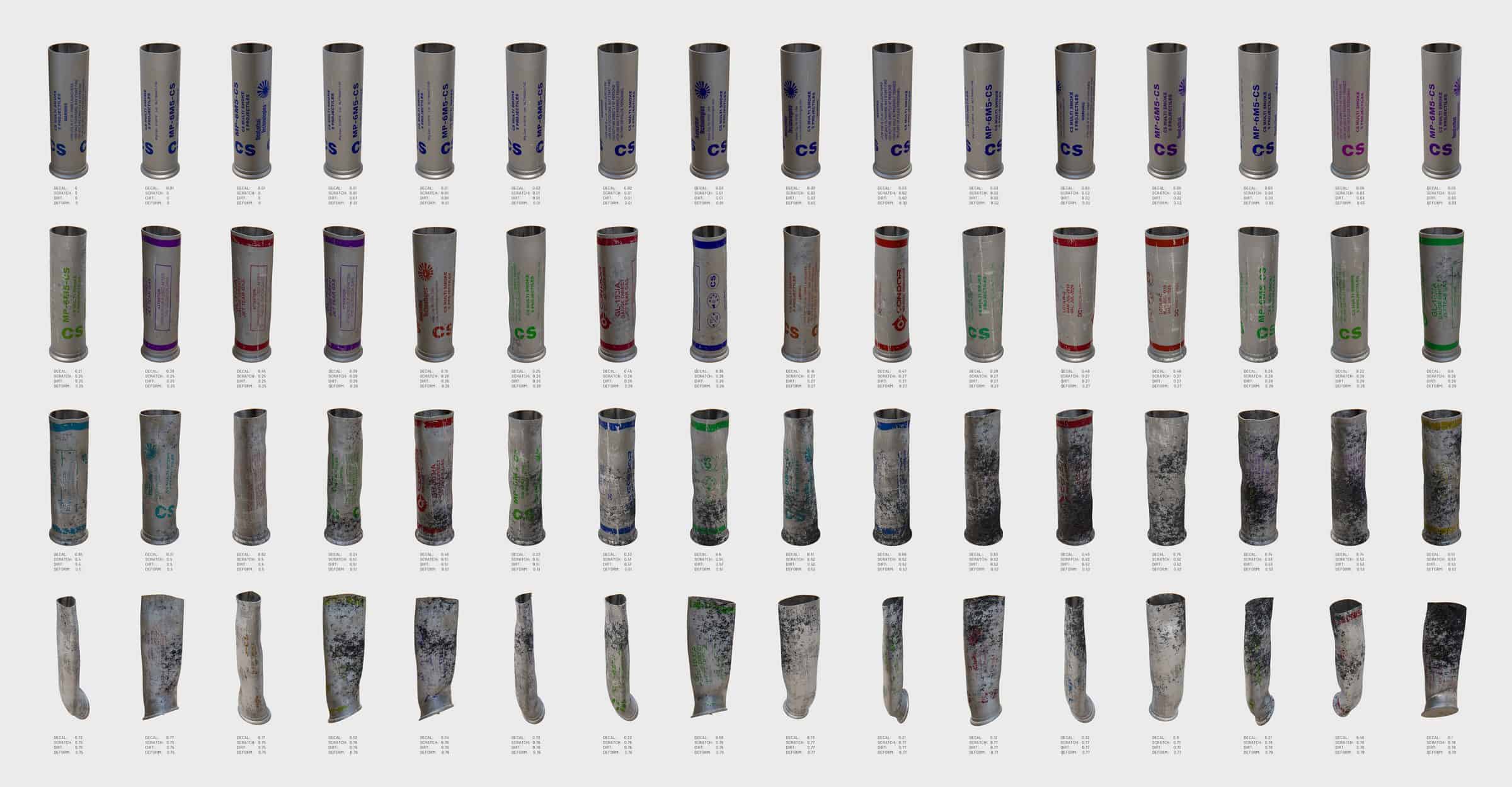

37-40mm tear gas canisters are some of the most common munitions deployed against protesters worldwide, including in places such as Hong Kong, Chile, the US, Venezuela and Sudan. Forensic Architecture is developing techniques to automate the search for and identification of such projectiles amongst the mass of videos uploaded online. We modelled thousands of commonly found variations of this object (including different degrees of deformation, scratching and charring, and different labels), rendered them as images, and used these images as training data for machine learning classifiers.
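As a rough illustration of how such variations might be enumerated before rendering, the following Python sketch samples one set of parameters per synthetic image. It is not Forensic Architecture's pipeline: the `CanisterVariation` class, the `sample_variations` function, the 0 to 1 parameter scales and the label names are all hypothetical, and the rendering step itself is left out.

```python
import random
from dataclasses import dataclass

# Hypothetical label set; the source does not list the actual labels.
LABELS = ("manufacturer_a", "manufacturer_b", "no_label")


@dataclass(frozen=True)
class CanisterVariation:
    """Parameters for one synthetic render (all names are illustrative)."""
    deformation: float  # 0.0 = intact, approaching 1.0 = heavily crushed
    scratches: float    # 0.0 = none, approaching 1.0 = dense scratching
    charring: float     # 0.0 = clean, approaching 1.0 = heavily burnt
    label: str


def sample_variations(count, seed=0):
    """Draw `count` random variations, reproducibly for a given seed."""
    rng = random.Random(seed)
    return [
        CanisterVariation(
            deformation=rng.random(),
            scratches=rng.random(),
            charring=rng.random(),
            label=rng.choice(LABELS),
        )
        for _ in range(count)
    ]


if __name__ == "__main__":
    for variation in sample_variations(3):
        print(variation)
```

Each sampled record would then drive one render of the 3D model. Fixing the seed keeps a training set reproducible, which makes it easier to compare classifiers trained on the same images.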

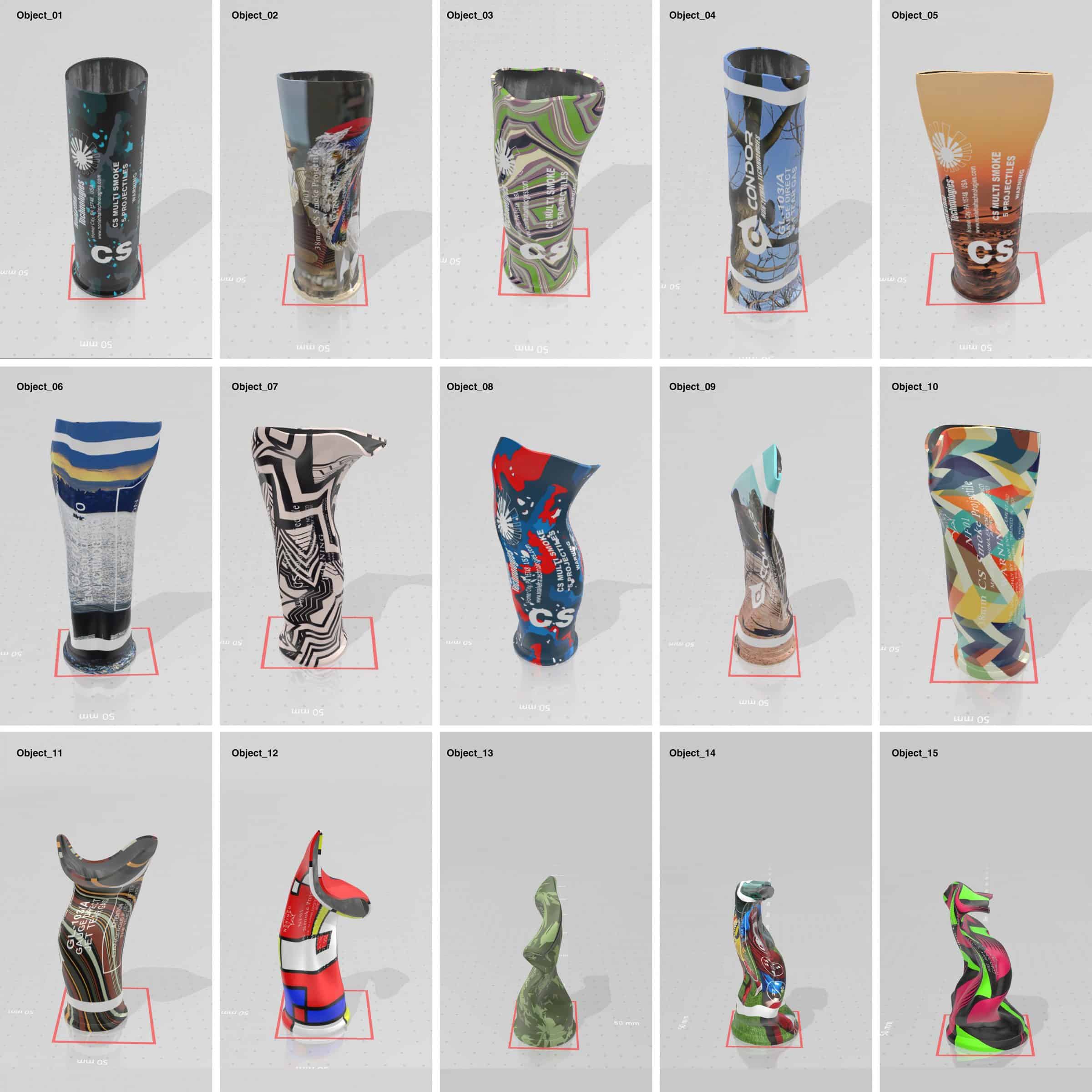

Machine learning classifiers that use rendered images of 3D models, or ‘synthetic data’, can be made to perform better when ‘extreme’ variations of the modelled object are included in the training examples. In addition to realistic synthetic variations, we textured a model of the projectile with random patterns and images. Extreme objects refine the thresholds of machine perception and recognisability, helping the classifier better recognise the object's shape, contours, and edges.
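The idea of mixing ‘extreme’ textures into a training set can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the project's actual method: the two pattern types (pixel noise and a two-colour checkerboard), the function names, and the `extreme_fraction` parameter are all hypothetical, since the source does not say which patterns were used or in what proportion.

```python
import random


def noise_texture(size, rng):
    """Return a size x size grid of independent random RGB pixels."""
    return [
        [tuple(rng.randrange(256) for _ in range(3)) for _ in range(size)]
        for _ in range(size)
    ]


def checker_texture(size, cell, rng):
    """Return a size x size checkerboard in two random colours."""
    first = tuple(rng.randrange(256) for _ in range(3))
    second = tuple(rng.randrange(256) for _ in range(3))
    return [
        [first if (x // cell + y // cell) % 2 == 0 else second
         for x in range(size)]
        for y in range(size)
    ]


def plan_textures(count, extreme_fraction, seed=0):
    """Label each training render as 'realistic' or 'extreme'."""
    rng = random.Random(seed)
    return [
        "extreme" if rng.random() < extreme_fraction else "realistic"
        for _ in range(count)
    ]


if __name__ == "__main__":
    rng = random.Random(0)
    plan = plan_textures(10, extreme_fraction=0.3)
    print(plan)
    print(noise_texture(2, rng))
    print(checker_texture(4, 2, rng)[0])
```

Because an ‘extreme’ texture carries no realistic surface detail, the only feature shared across those renders is the object's geometry, which is consistent with the text's point that such examples push the classifier towards shape, contours, and edges.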
